Can we turn the world itself into the interface for learning?

Abstract

We encounter the world through interfaces, but most of them flatten how knowledge is felt and understood.

Inspired by The Magic School Bus, I turn the physical world itself into an interactive interface, letting people learn by directly manipulating invisible principles like scale, time, and perception.

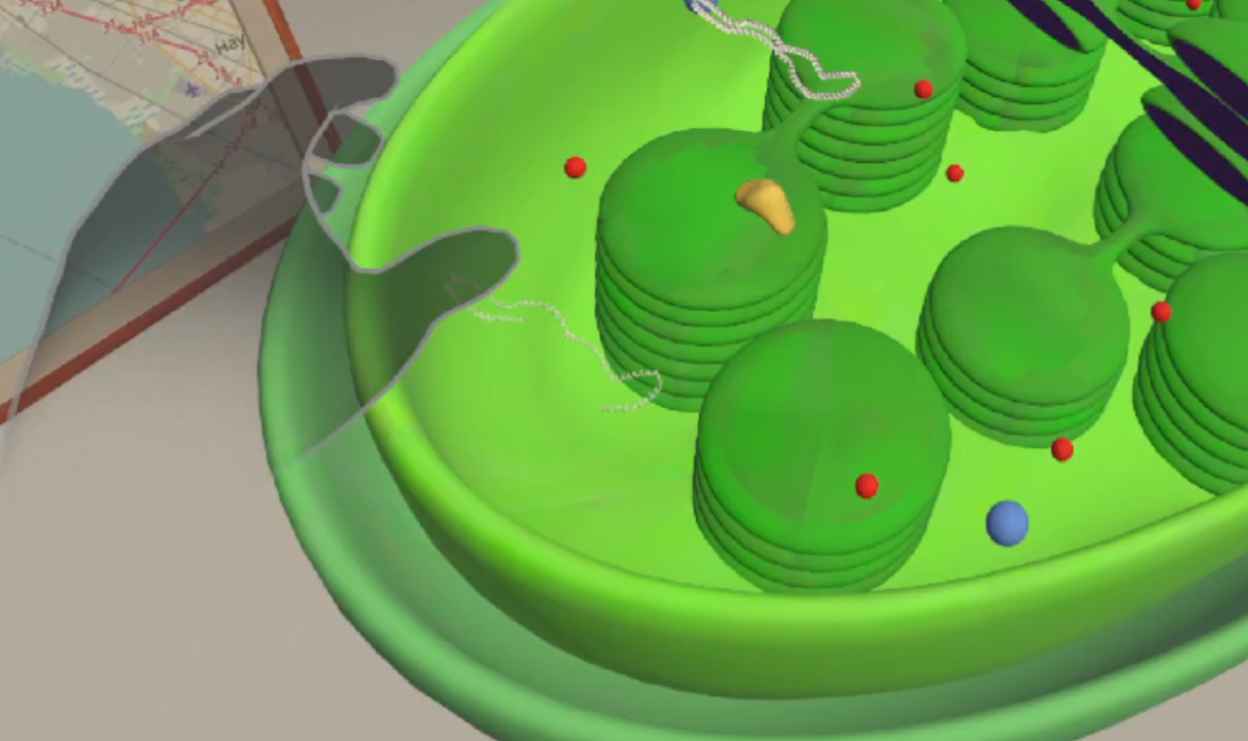

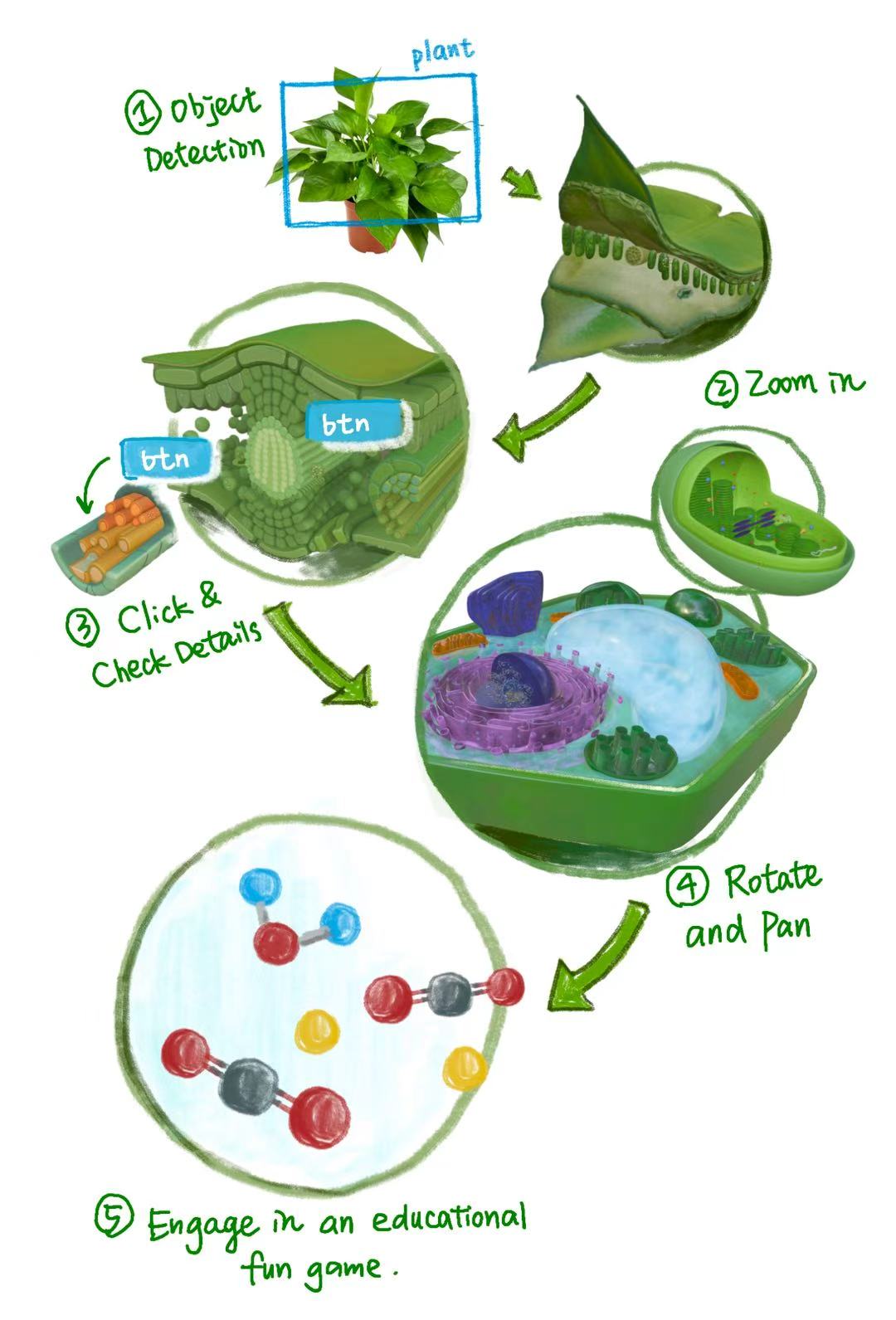

Invisible Worlds explores Extended Reality (especially Mixed Reality) as a perspective-shifting educational tool, aiming to turn the physical world itself into a user interface. Built with Unity and the Meta Quest SDK, the system overlays interactive layers onto real-world objects, allowing learners to experience phenomena normally beyond the limits of the human body or human perception. For example, learners can zoom inside a plant, down to its cells and even molecules, to find out how photosynthesis works, or sense the surrounding space as mapped through bat-inspired echolocation.

The goal is simple: to make learning intuitive and fun.

Toolkit

Unity, Meta Quest SDK

Award

The initial prototype won:

Second Place & Most Business Viable

Unity × Rokid XR Hackathon, Shanghai, 2025

Video Demos

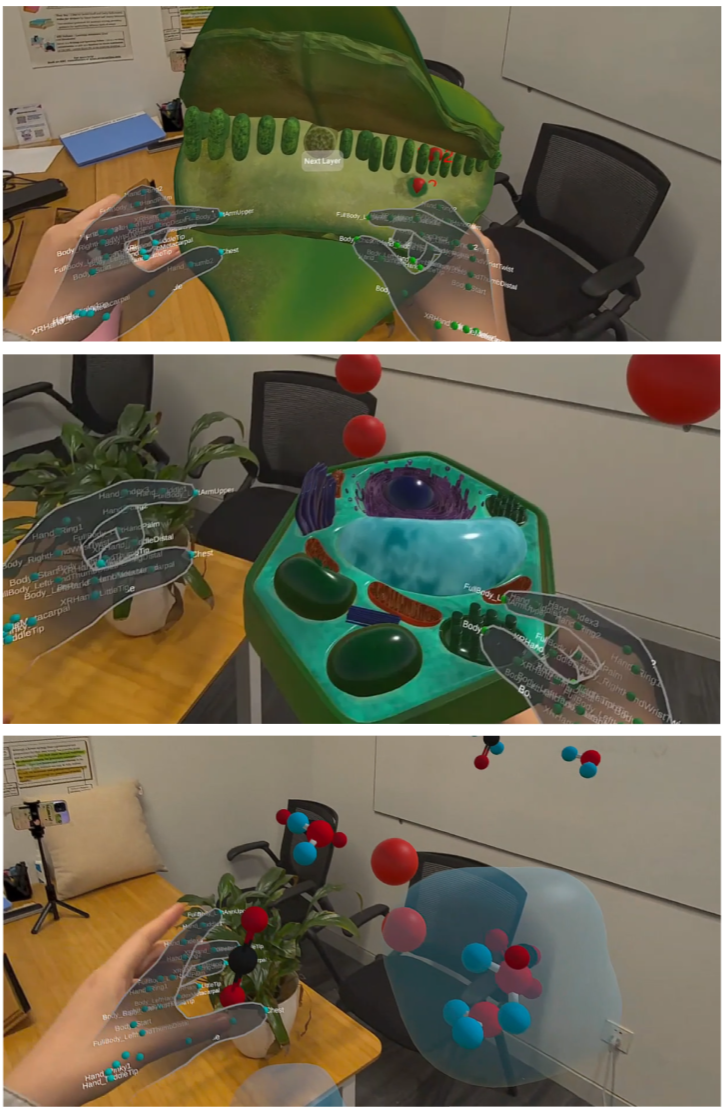

Demo 1: Journey Inside a Plant

A real plant serves as a portal to smaller dimensions. Through hand gestures, learners can zoom from leaf to cell, even down to the molecular level.

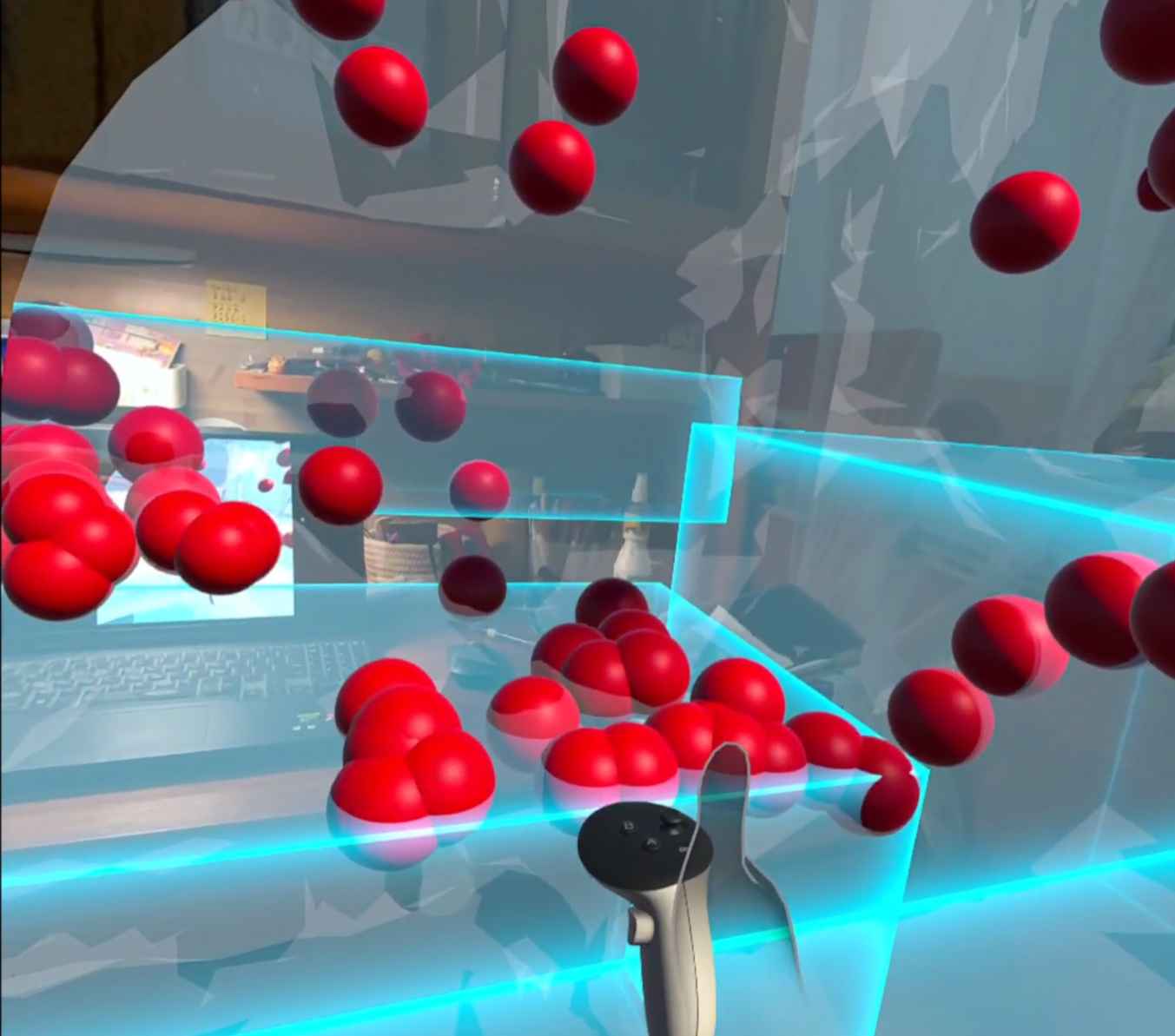

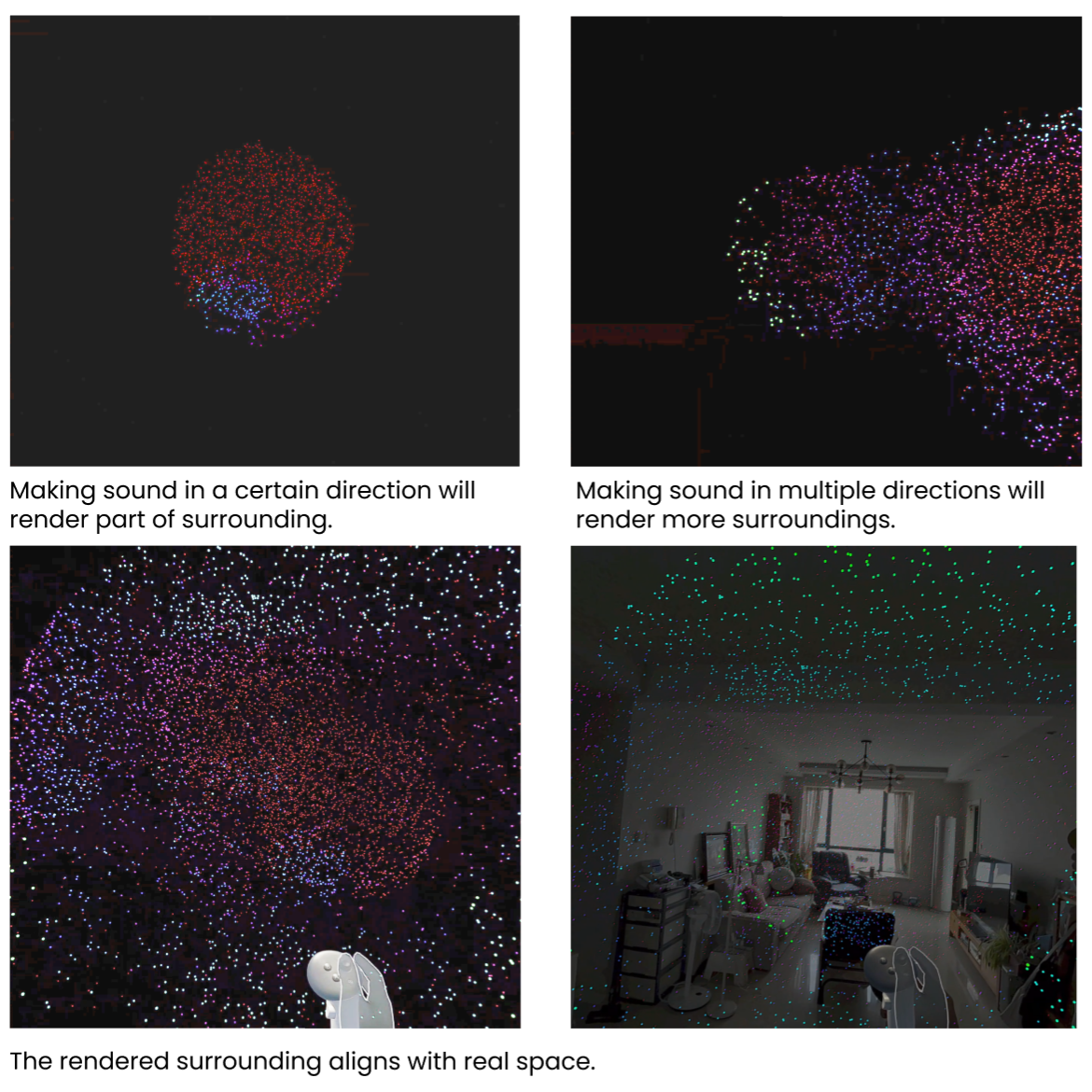

Demo 2: Bat Vision

Learners perceive their surroundings from a bat’s perspective by making sounds in different directions. Their vocal input is captured in real time, mapped via ray-casting, and rendered as a point cloud that reconstructs the space.

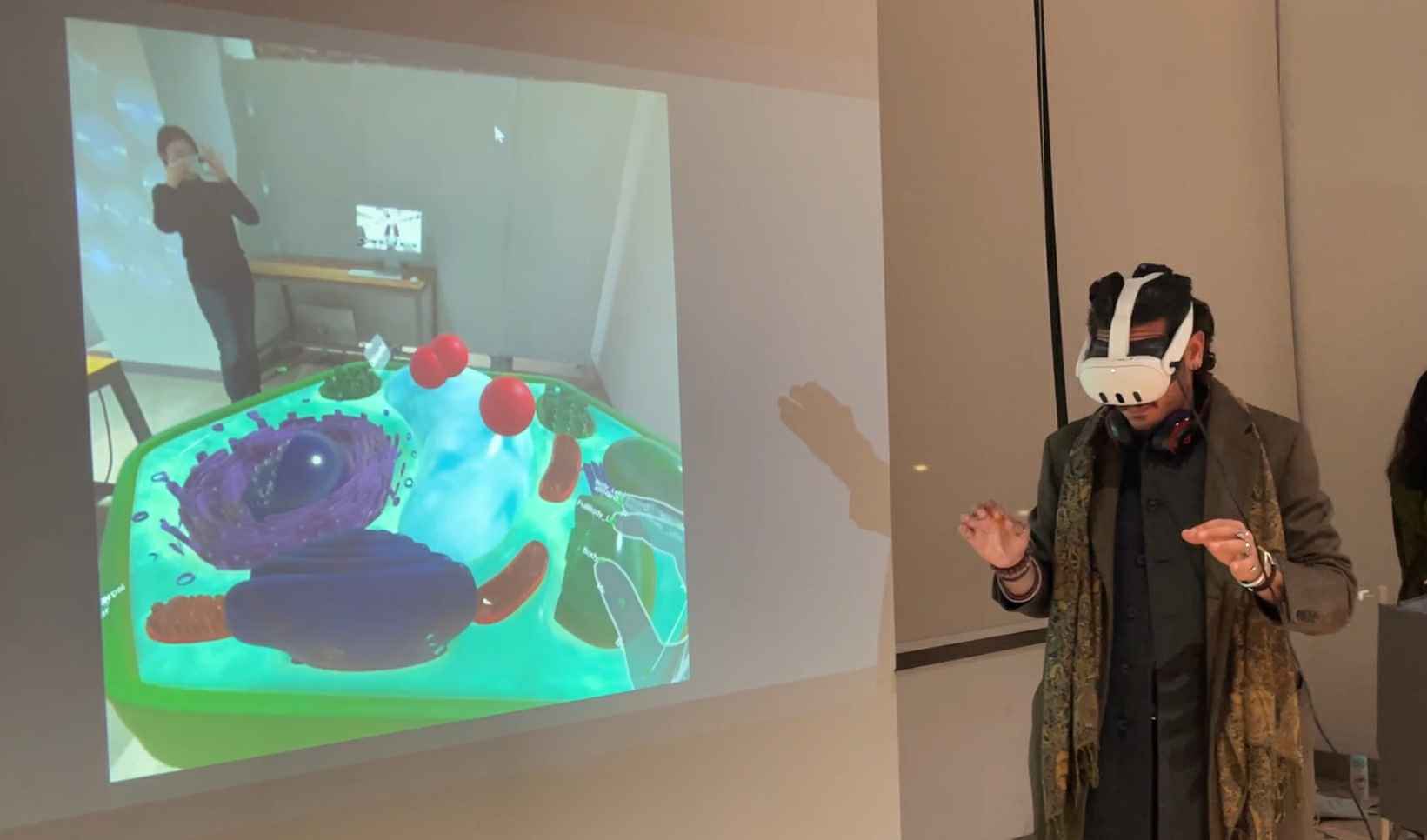

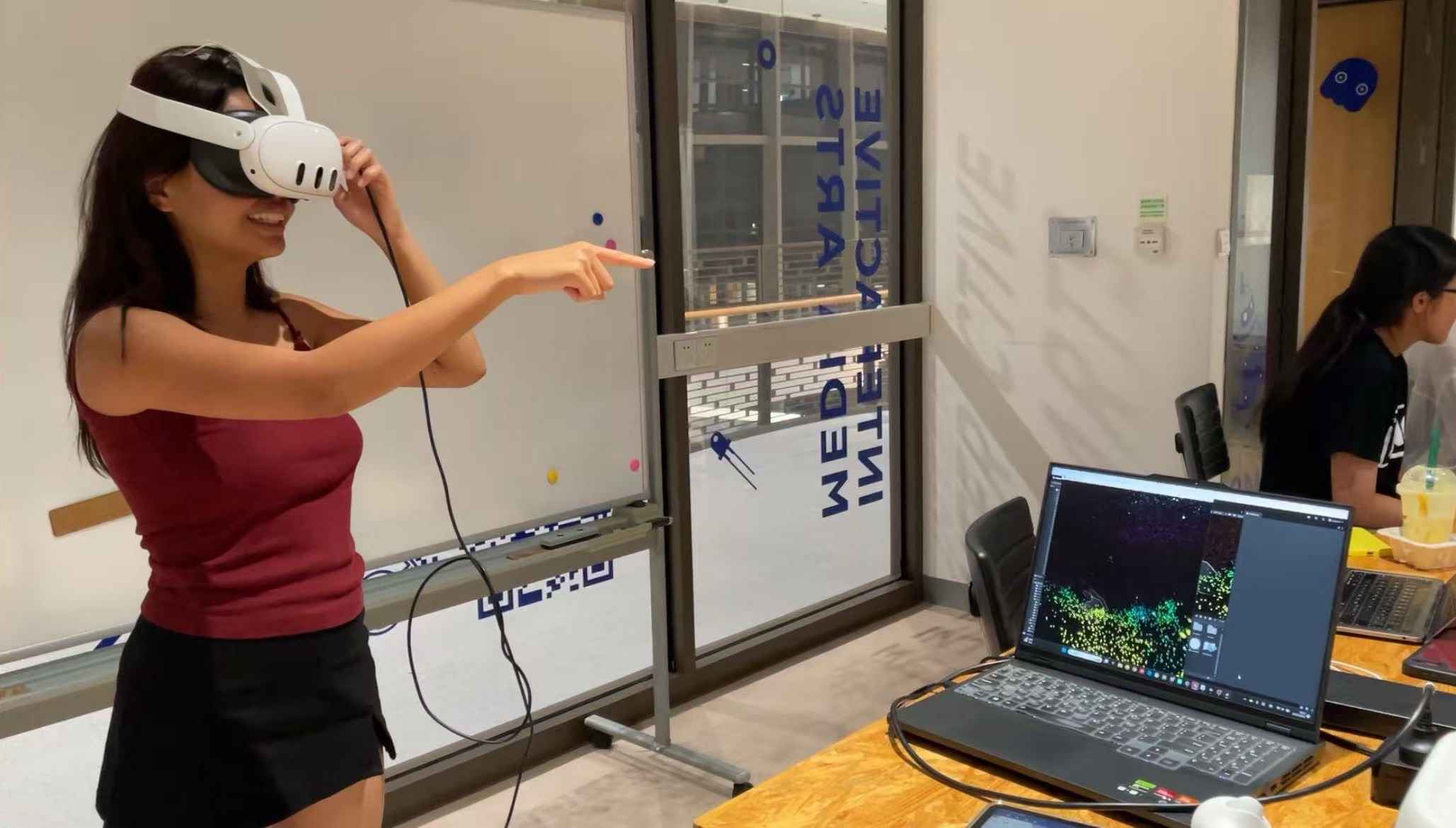

Workshop Images

Audience exploring the journey inside of a plant

Teaching children to interact with the project

Audience exploring bat vision

User Feedback

“This project is very fun and practical, I wish I could have this in my high school class! I can see great potential in putting this piece into application in middle school or high school.” -- Maelyn, IMA Student

“The project is designed so well and the learning process is really solid in it. The experience of zooming and dimension switching in the AR is so great that it gives the audience an even more immersive feeling.” -- Jean, IMA Student

“The concept is really interesting and meaningful! I love being put into bat’s shoes to sense the world, it really gives me an alienated feeling and makes me reflect on how the world could be perceived differently.” -- Lisa, IMA Student

Concept

Invisible Worlds emerges from a frustration with how traditional education flattens complex phenomena into static diagrams and memorized definitions. We learn about photosynthesis but never get to see the processes happening inside a plant. We know bats navigate with sound without ever experiencing their perception of space. This project bridges that disconnect by reimagining how we interact with the world to learn.

My goals include the following:

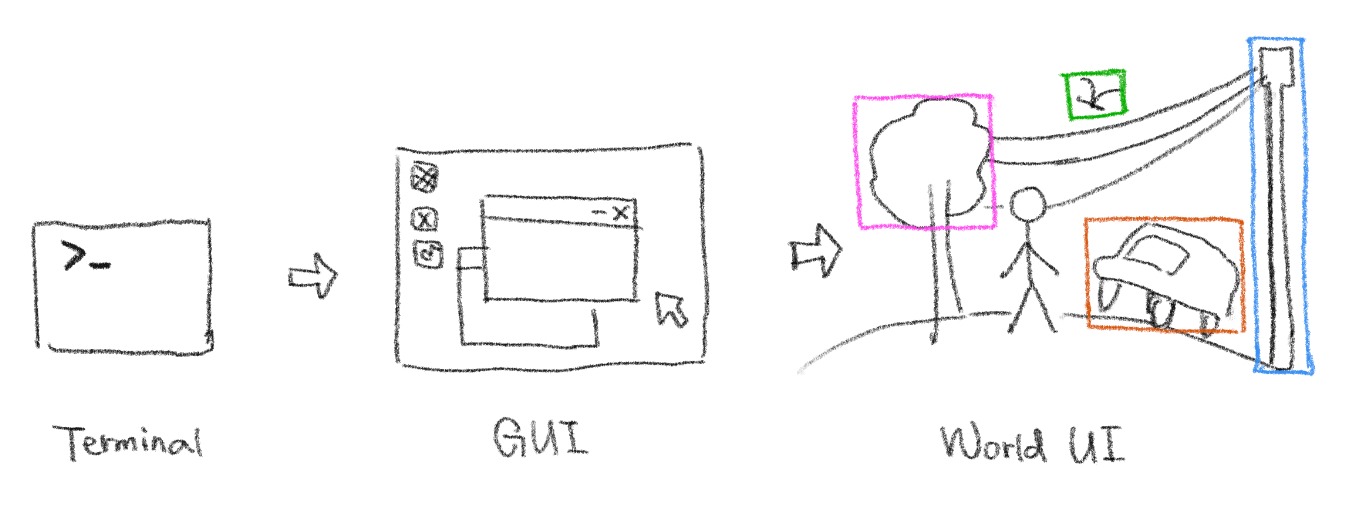

1. Reimagine Interfaces:

Ever since the concept of the "interface" was created, we have experienced text-based interfaces (the terminal), graphical interfaces (the screen), and more recently, spatial interfaces (extended reality). Spatial interfaces open up many new possibilities, yet in many XR applications interaction is still limited to toggling GUI panels or information cards in the 3D world, or attaching 3D objects to detected anchors. To me, the potential of spatial interfaces is yet to be unlocked. I want to build an experience where we can seamlessly enter another "world" through physical objects with the help of XR. Countless objects become countless "portals" that come together to form the world as an interface.

2. Shift Perspective:

I was initially inspired by The Magic School Bus, where Ms. Frizzle teaches her class through adventures. Instead of reading about an object, they enter it, and experience how it works from the inside out.

Rather than transmitting knowledge one way, from a textbook, slide deck, or lecture to a passive learner, I want to pass on knowledge, or more precisely the awe and curiosity for knowledge, through interactive experiences. I believe that Extended Reality can offer a uniquely embodied experience. Beyond conveying facts, the project cultivates curiosity and empathy by letting learners embody experiences beyond human physical limits, exploring scales, modes of perception, physical laws, and dimensions that humans can't normally reach.

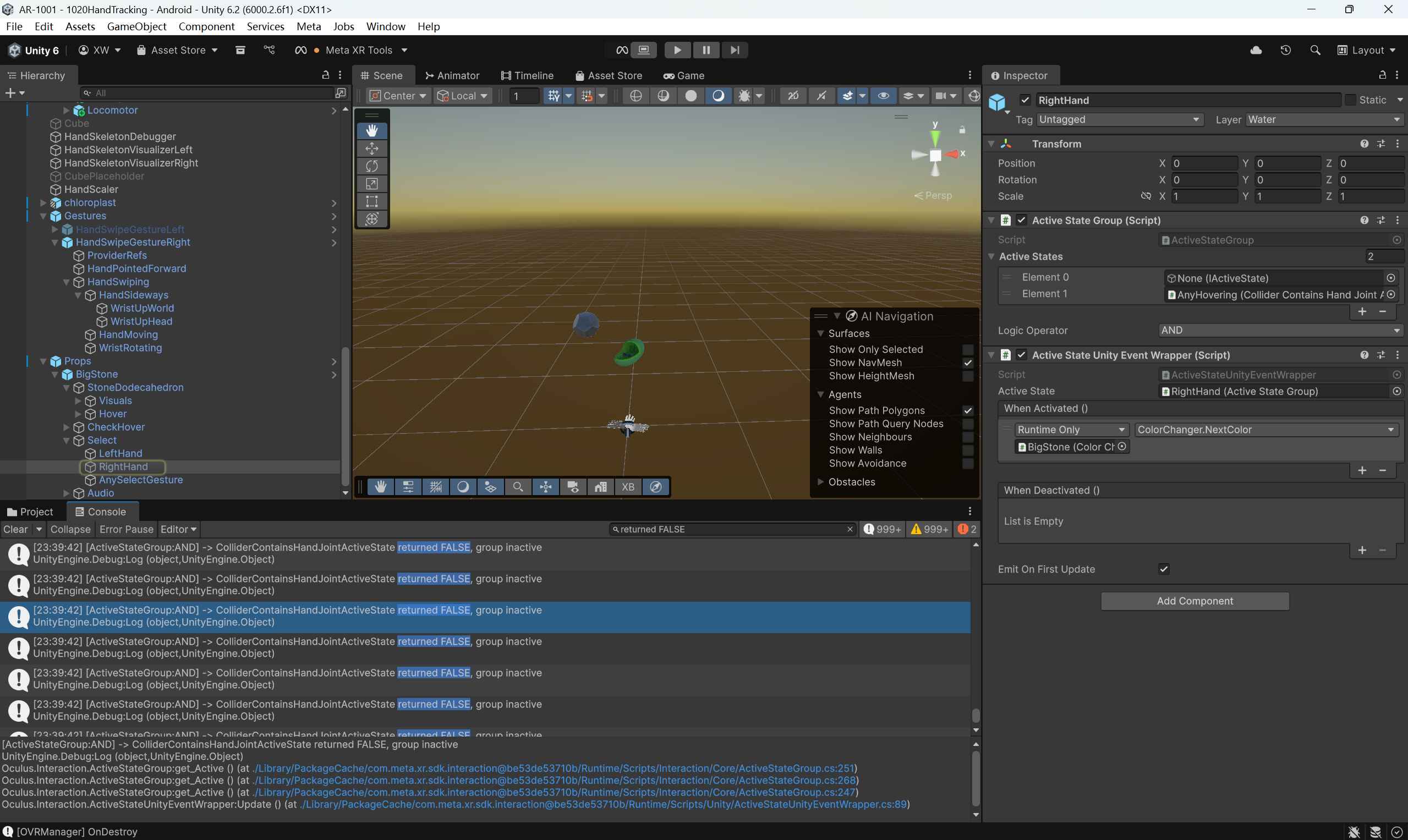

3. Technology Choice: Mixed Reality

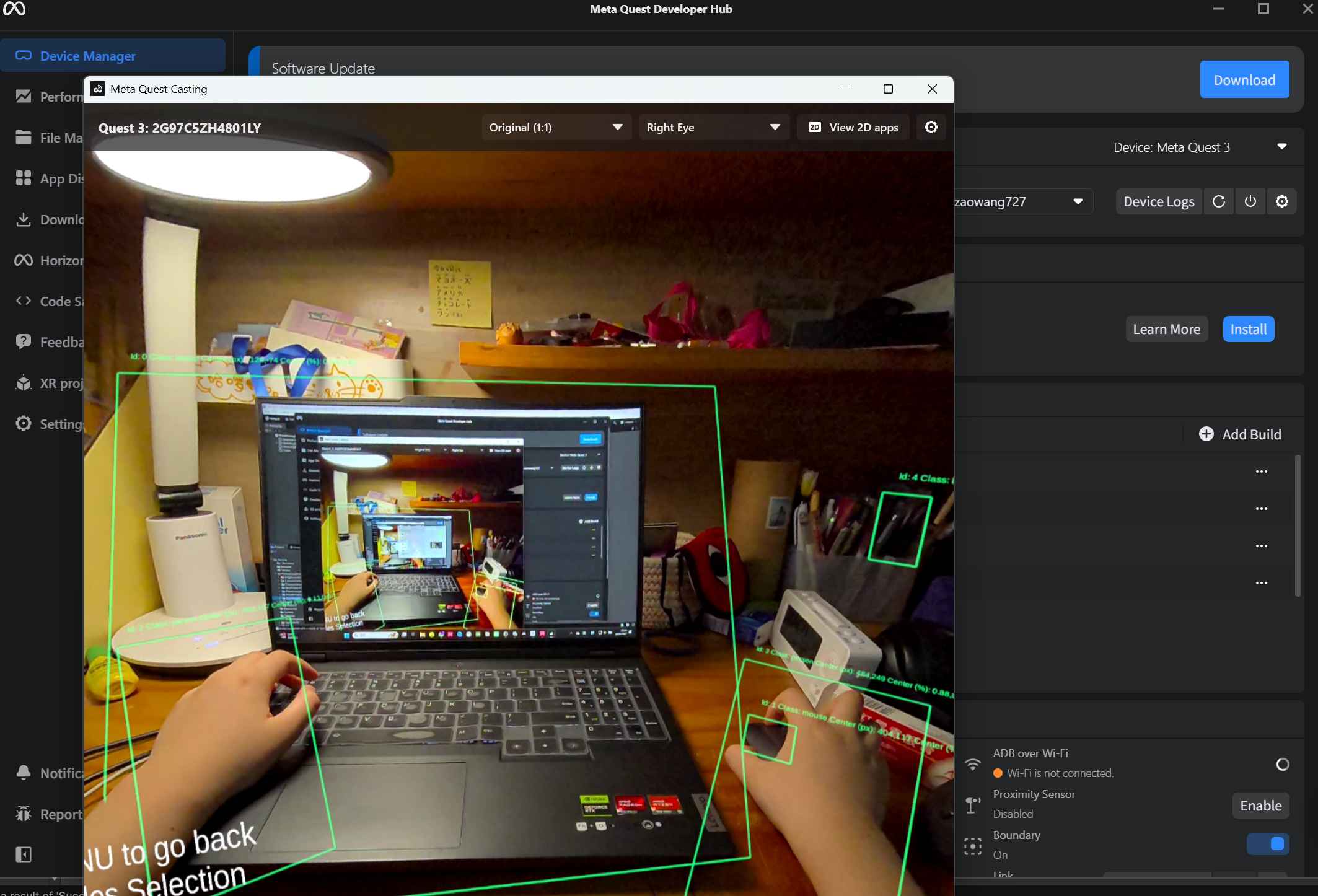

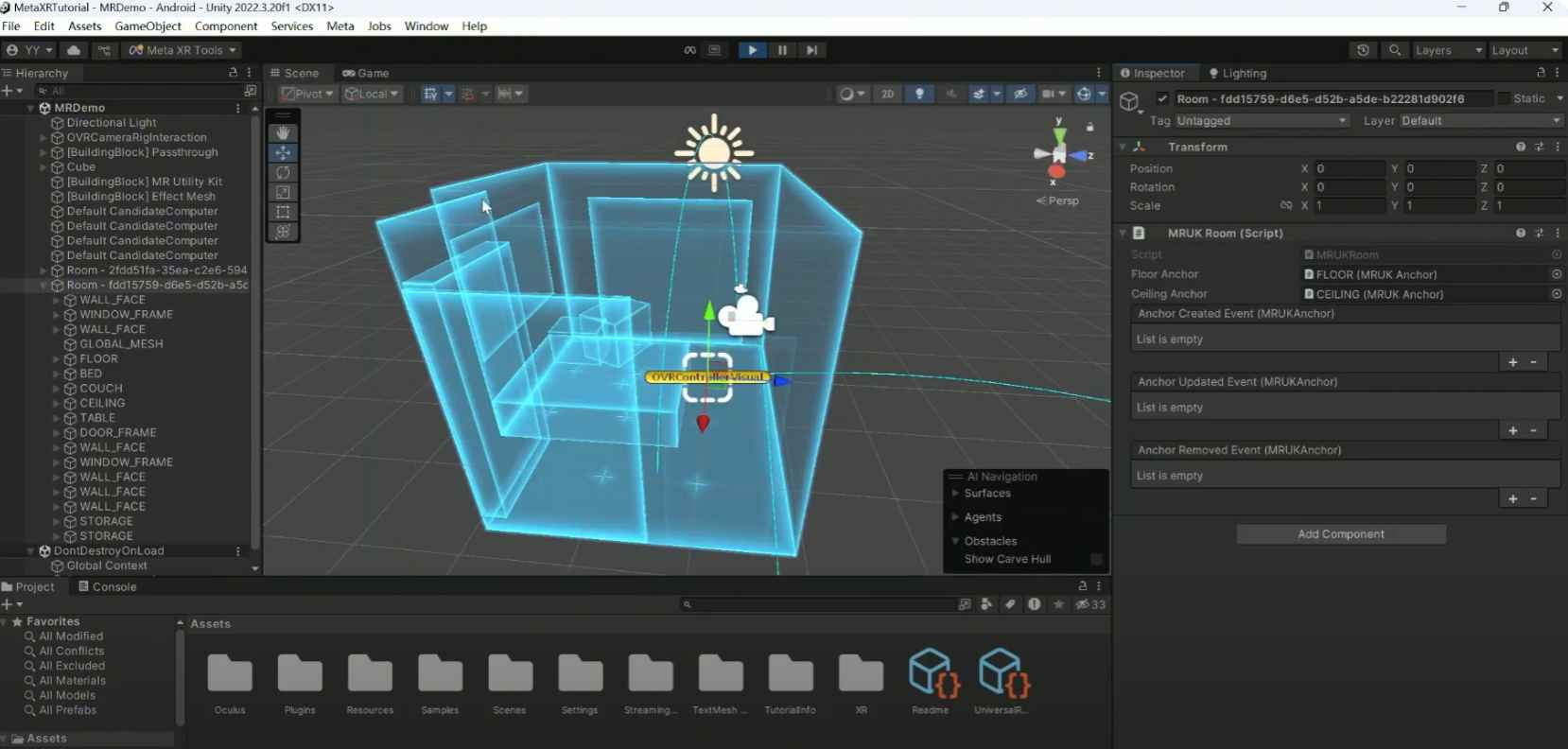

Built in Unity with Meta Quest hand tracking and passthrough, the system leverages Mixed Reality to seamlessly merge the physical and virtual worlds. MR ensures interactions are embodied, spatially grounded, and immediate, making the world itself the playground and teacher.

Design

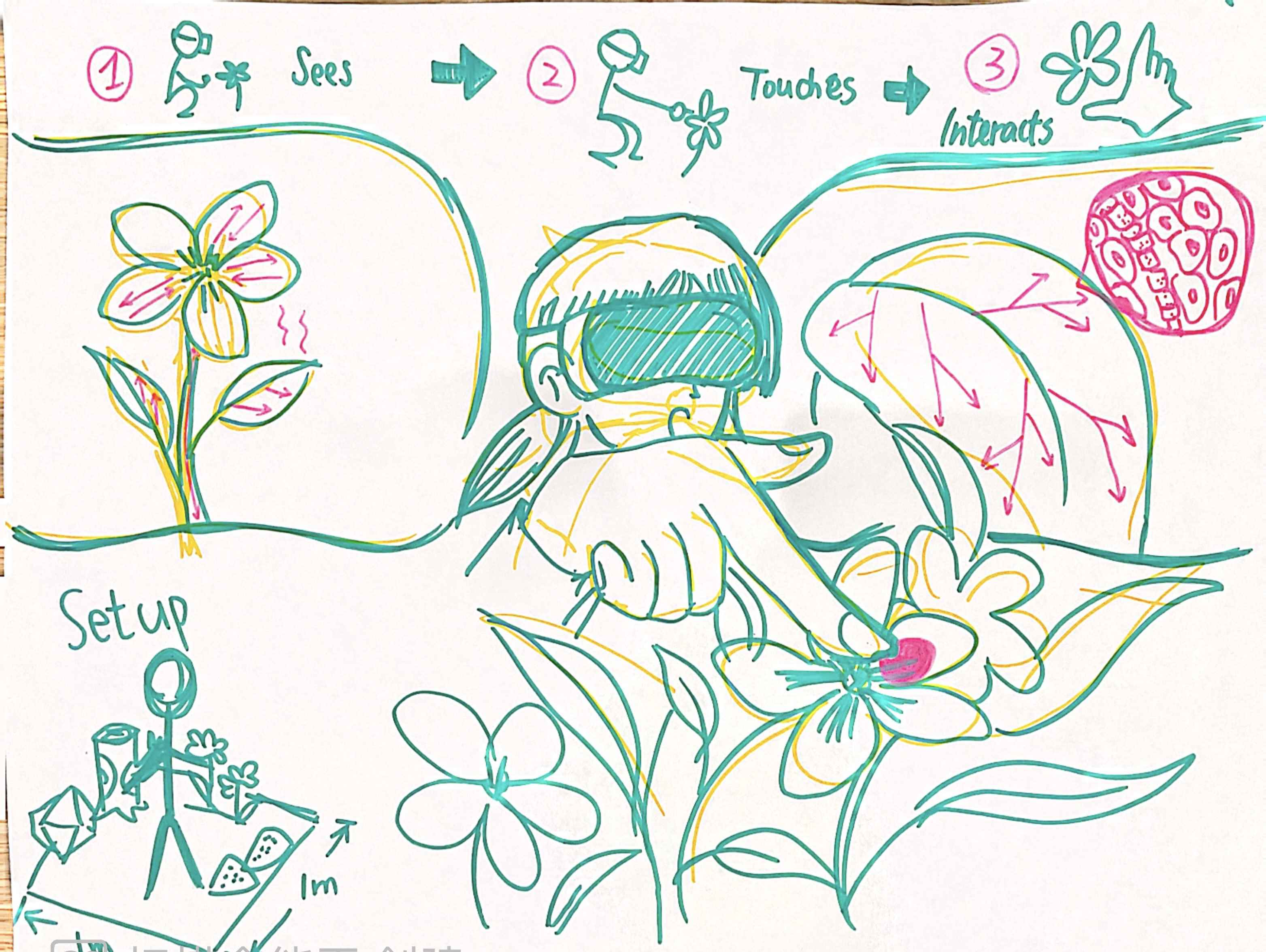

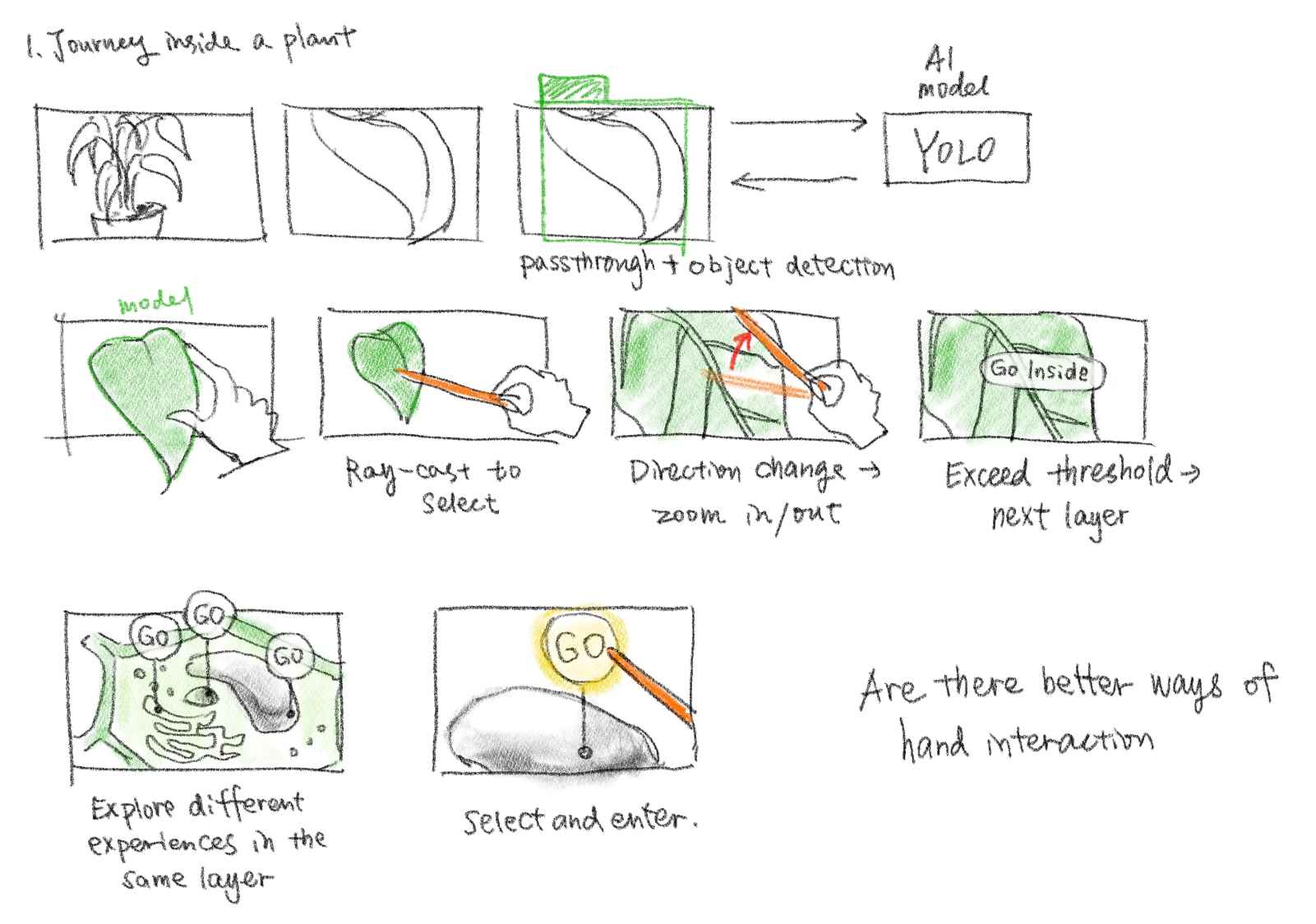

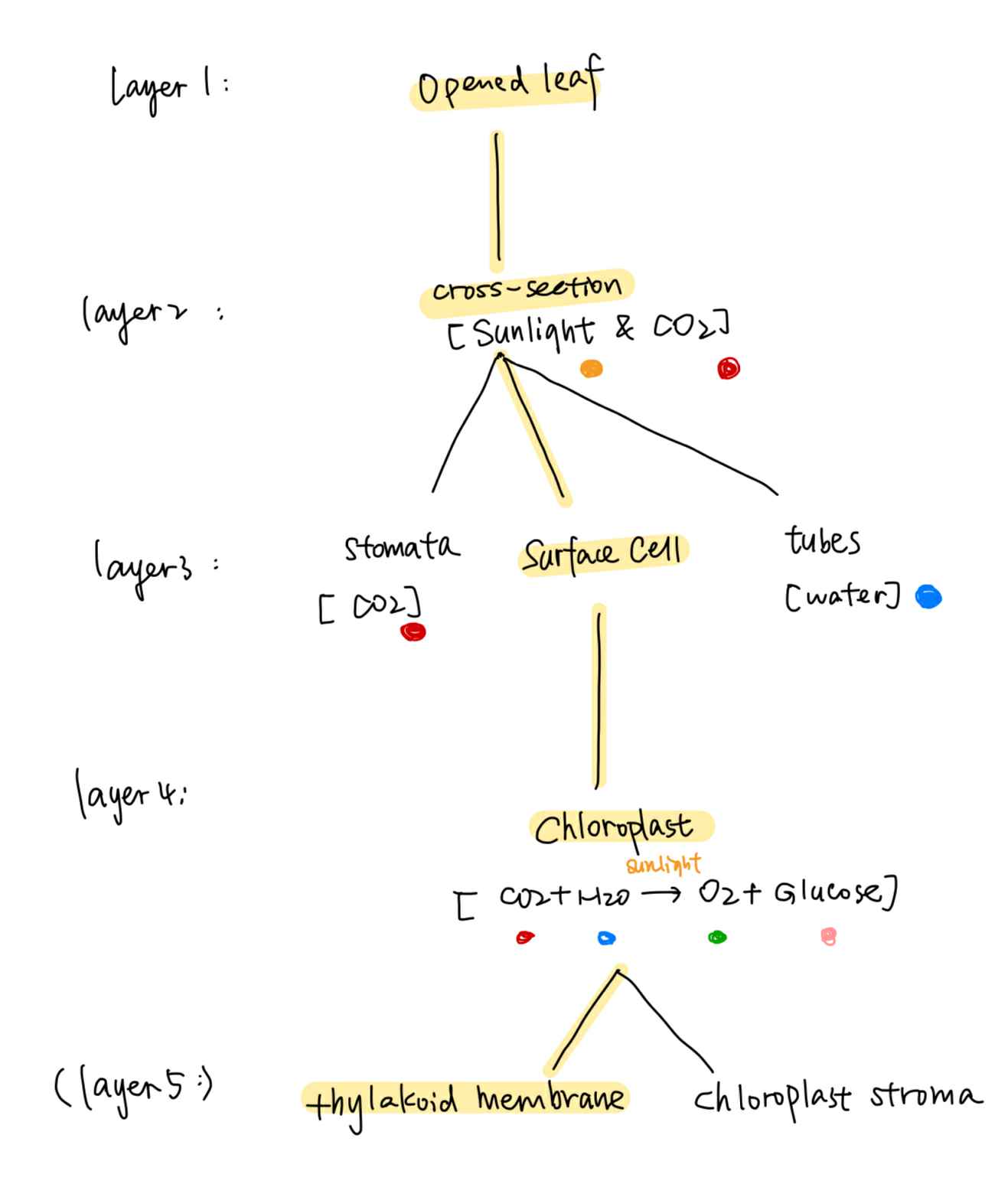

1. Journey Inside a Plant

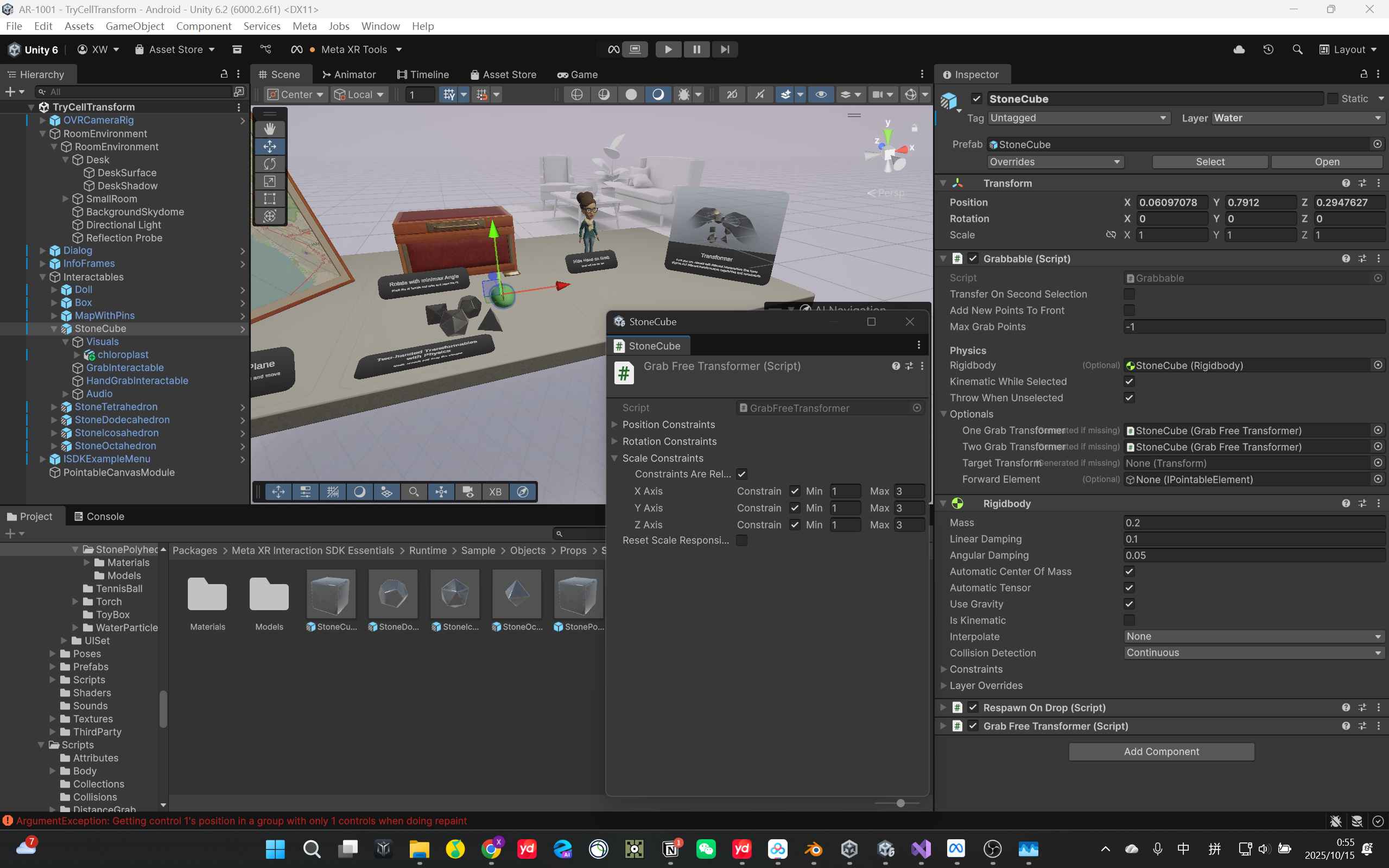

It enables a user to stand in front of a real plant, pinch their fingers, and physically “zoom” through layers of biological structure, from leaf to cell to chloroplast, all the way down to molecules. Several interactive mini-games appear along the way. For example, users can guide CO₂ and water molecules together to create sugar and oxygen molecules, turning the abstract idea of photosynthesis into something tactile and playful.
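As a rough illustration of the chemistry behind the photosynthesis mini-game, the sketch below checks that six CO₂ and six H₂O molecules carry exactly the atoms needed for one glucose and six O₂ molecules. It is written in Python for brevity rather than the project's Unity C#, and the names `ATOMS`, `atom_total`, and `can_react` are hypothetical, not taken from the project's code.

```python
from collections import Counter

# Atom counts per molecule (standard chemical formulas).
ATOMS = {
    "CO2": Counter(C=1, O=2),
    "H2O": Counter(H=2, O=1),
    "C6H12O6": Counter(C=6, H=12, O=6),  # glucose, the "sugar" in the game
    "O2": Counter(O=2),
}

# Overall photosynthesis reaction: 6 CO2 + 6 H2O -> C6H12O6 + 6 O2
REACTANTS = {"CO2": 6, "H2O": 6}
PRODUCTS = {"C6H12O6": 1, "O2": 6}


def atom_total(molecules):
    """Sum the atoms contained in a {molecule name: count} mapping."""
    total = Counter()
    for name, count in molecules.items():
        for atom, n in ATOMS[name].items():
            total[atom] += n * count
    return total


def can_react(collected):
    """True once the player has gathered enough of every reactant."""
    return all(collected.get(name, 0) >= n for name, n in REACTANTS.items())


if __name__ == "__main__":
    print(atom_total(REACTANTS) == atom_total(PRODUCTS))
    print(can_react({"CO2": 6, "H2O": 4}))
```

A game loop could call `can_react` each time a molecule is guided into place and trigger the sugar-and-oxygen animation once it returns true.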

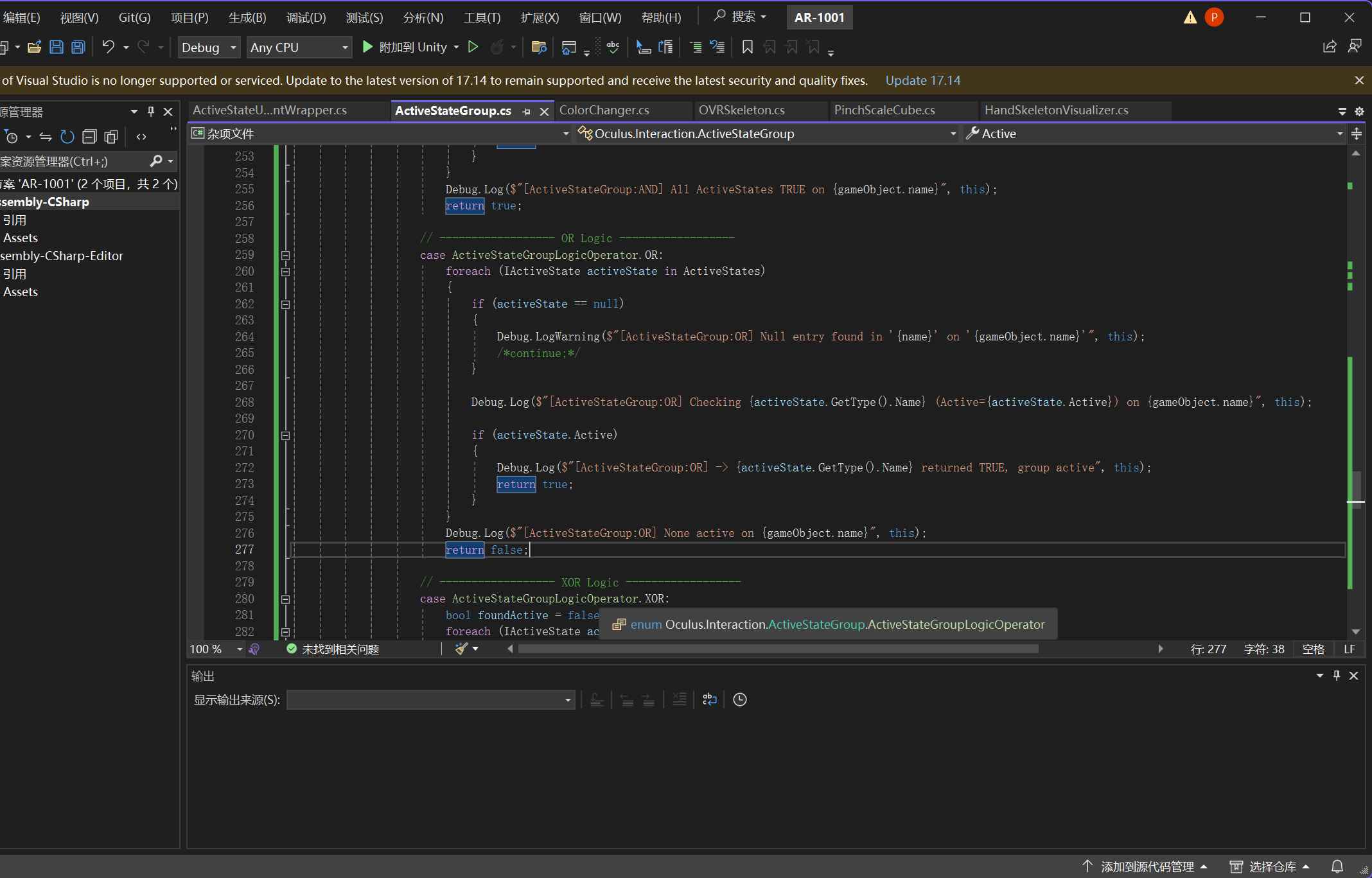

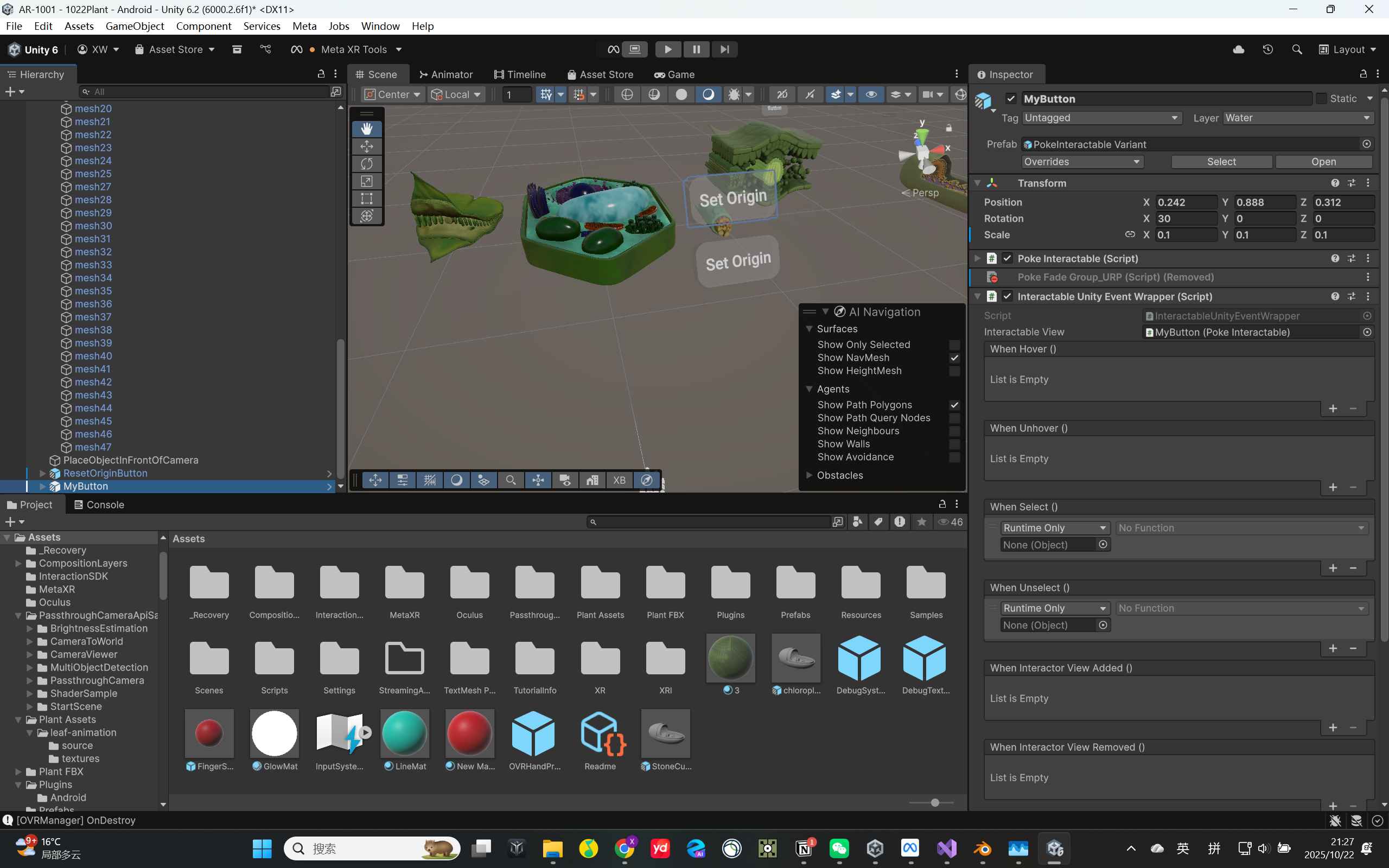

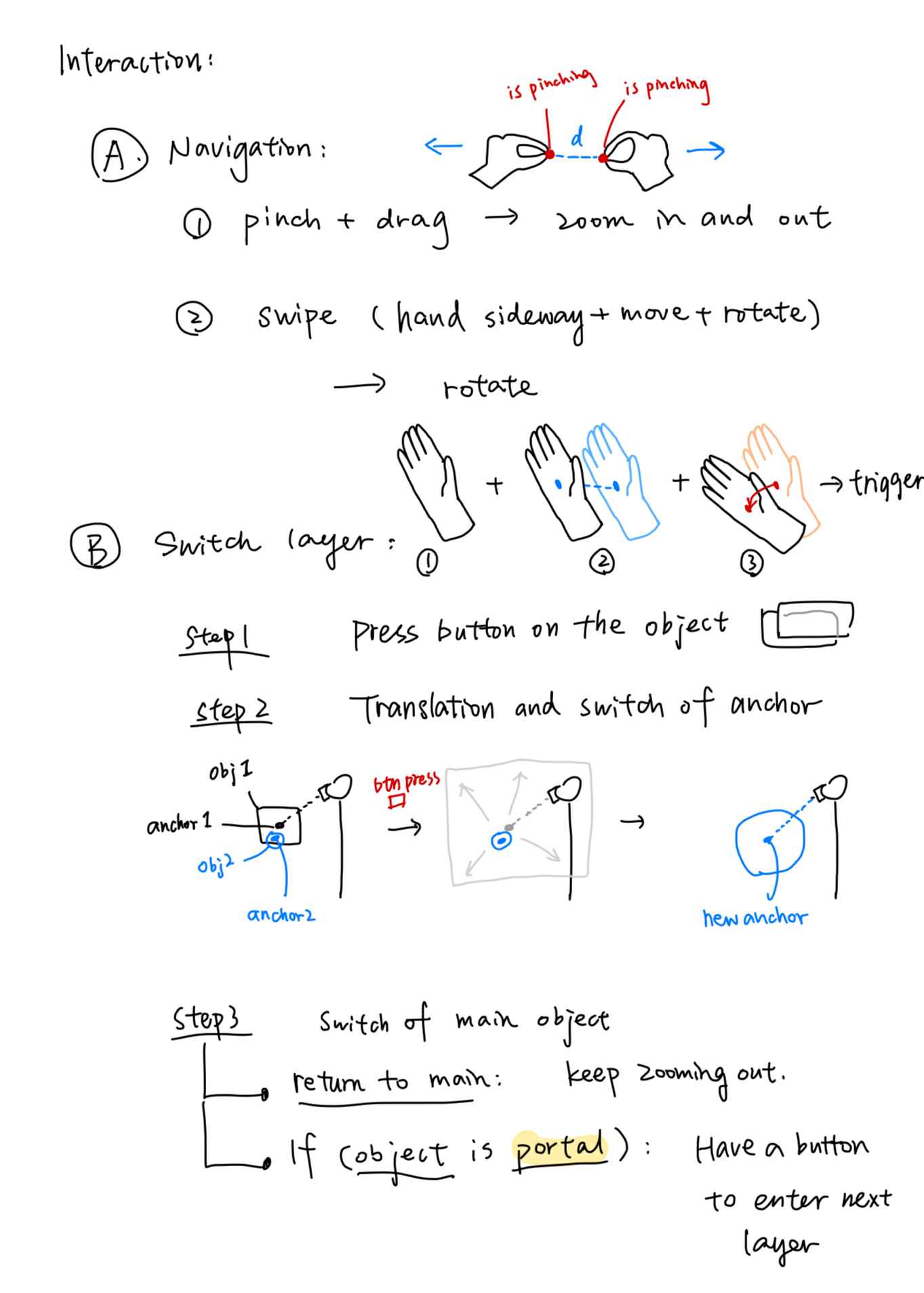

I built custom spatial controls in Unity with C# scripting, including grab, pinch-and-drag to scale, swipe-to-rotate, poke, and raycast-to-place-objects.
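To make the pinch-and-drag-to-scale idea concrete, here is a minimal sketch of the underlying math, assuming a two-handed pinch where the scale factor is the ratio of the current to the starting distance between the two pinch points. It is written in Python for brevity rather than the project's Unity C#. The clamping range, the factor `SCALE_PER_LEVEL`, and the mapping from accumulated scale to a zoom layer are illustrative assumptions, not values from the project.

```python
import math

# Zoom layers in the order described for the plant journey.
LEVELS = ["leaf", "cell", "chloroplast", "molecule"]

# Hypothetical: how much accumulated scale moves the learner one layer deeper.
SCALE_PER_LEVEL = 4.0


def pinch_scale(start_left, start_right, left, right,
                min_scale=0.25, max_scale=64.0):
    """Scale factor from a two-handed pinch-and-drag gesture.

    Each argument is an (x, y, z) pinch position in metres. The factor is the
    current hand separation divided by the separation when the pinch began,
    clamped so a tracking glitch cannot produce an extreme jump.
    """
    start = math.dist(start_left, start_right)
    if start < 1e-6:  # hands started on top of each other: ignore the gesture
        return 1.0
    raw = math.dist(left, right) / start
    return max(min_scale, min(max_scale, raw))


def zoom_level(total_scale):
    """Map the accumulated scale to one of the named layers."""
    index = 0
    threshold = SCALE_PER_LEVEL
    while index < len(LEVELS) - 1 and total_scale >= threshold:
        index += 1
        threshold *= SCALE_PER_LEVEL
    return LEVELS[index]


if __name__ == "__main__":
    factor = pinch_scale((0, 0, 0), (0.1, 0, 0), (0, 0, 0), (0.4, 0, 0))
    print(zoom_level(factor))
```

In an engine, `pinch_scale` would run every frame while both pinches are held, and the result would be multiplied into the scale the content had when the gesture started.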

To see more details, please scroll down to the Development Section.

Interaction Details: Navigating different dimensions through XR spatial controls.

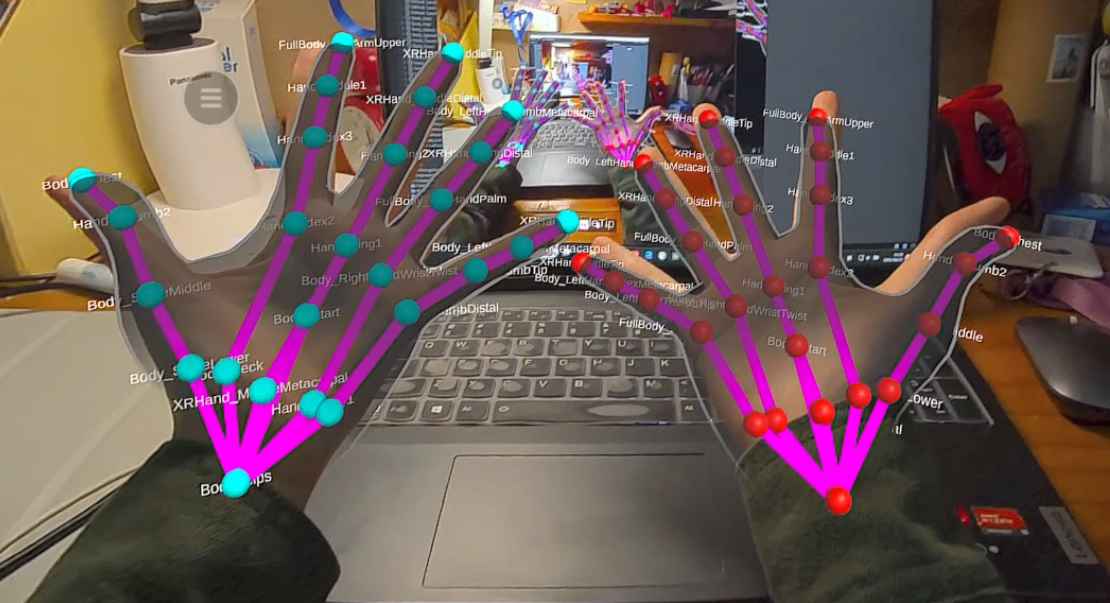

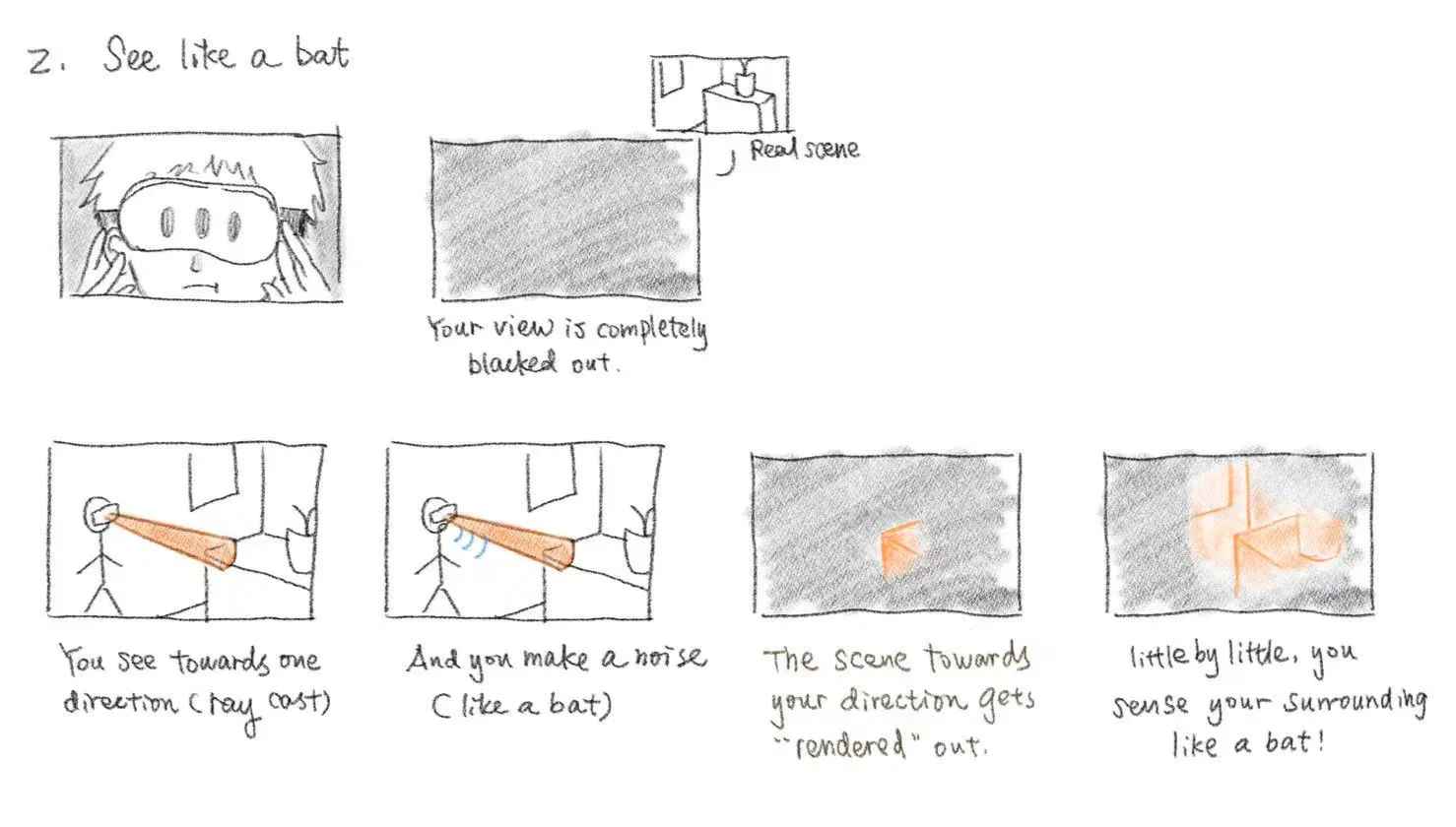

2. Bat Vision

User Interaction Design: Users experience space from a bat’s perspective, sensing their surroundings by making sound in a chosen direction.

Their vocal input is captured in real time, mapped via ray-casting to simulate echolocation, and rendered as a point cloud that reconstructs the surroundings.

Technical Implementation: I developed the space-sensing interaction using the Depth API and Raycast API offered by MR Utility Kit. See details in the Development section.
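The voice-to-point-cloud pipeline can be sketched in an engine-agnostic way. The Python below is a simplified stand-in, not the project's Unity C# and not the MR Utility Kit API: an axis-aligned box replaces the real room geometry, microphone loudness (RMS) sets how far the "echo" reaches, and rays are cast in a cone around the direction the learner is facing. All names and constants (`SILENCE_THRESHOLD`, `REACH_PER_LOUDNESS`, `echo_points`, and so on) are hypothetical.

```python
import math
import random

SILENCE_THRESHOLD = 0.02   # hypothetical: RMS below this counts as silence
REACH_PER_LOUDNESS = 20.0  # hypothetical: metres of reach per unit of RMS


def rms(samples):
    """Root-mean-square loudness of a block of audio samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cone_directions(forward, half_angle_deg, count, rng):
    """Unit vectors within half_angle_deg of forward (rejection sampling)."""
    cos_limit = math.cos(math.radians(half_angle_deg))
    directions = []
    while len(directions) < count:
        d = normalize(tuple(rng.gauss(0.0, 1.0) for _ in range(3)))
        if sum(a * b for a, b in zip(d, forward)) >= cos_limit:
            directions.append(d)
    return directions


def exit_distance(origin, direction, room_min, room_max):
    """Distance along a ray from inside a box room to the wall it hits."""
    t_exit = math.inf
    for o, d, lo, hi in zip(origin, direction, room_min, room_max):
        if d > 0:
            t_exit = min(t_exit, (hi - o) / d)
        elif d < 0:
            t_exit = min(t_exit, (lo - o) / d)
    return t_exit


def echo_points(origin, forward, samples, room_min, room_max,
                rays=64, half_angle_deg=30.0, seed=0):
    """Point cloud of wall hits revealed by one burst of sound."""
    loudness = rms(samples)
    if loudness < SILENCE_THRESHOLD:
        return []
    reach = loudness * REACH_PER_LOUDNESS  # louder sounds travel further
    rng = random.Random(seed)
    points = []
    for d in cone_directions(normalize(forward), half_angle_deg, rays, rng):
        t = exit_distance(origin, d, room_min, room_max)
        if t <= reach:
            points.append(tuple(o + t * c for o, c in zip(origin, d)))
    return points


if __name__ == "__main__":
    cloud = echo_points((0, 1.5, 0), (0, 0, 1), [0.5] * 100,
                        (-2, 0, -2), (2, 3, 2))
    print(len(cloud))
```

In the headset, the box intersection would be replaced by ray queries against the real environment, and each returned point would be drawn as a particle so the room gradually appears as the learner keeps calling out.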

Interaction Details:

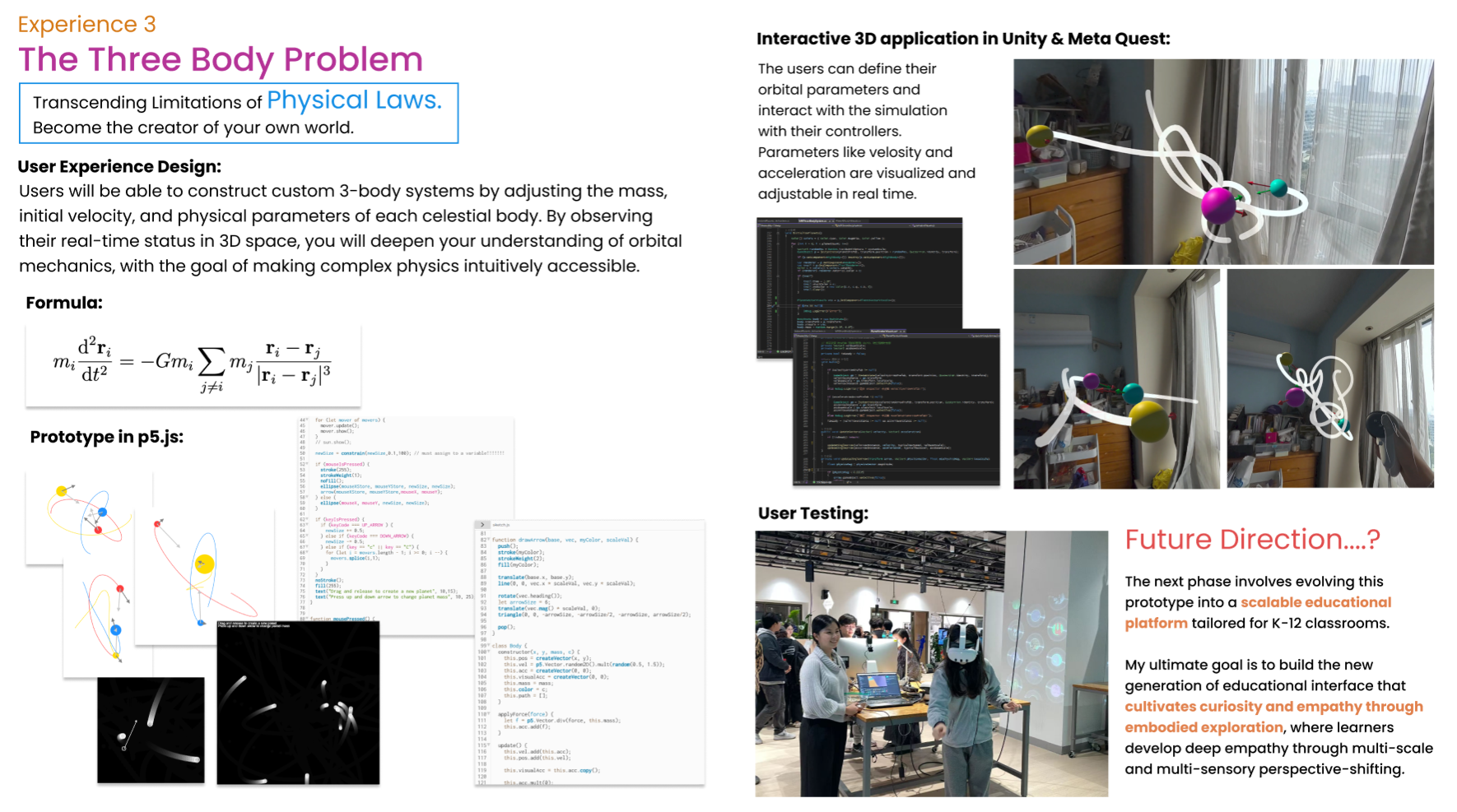

3. The Three-body Problem (In progress)

Development