A CS research project on Automated Assessment of AI-Generated Explorable Explanations.

Abstract

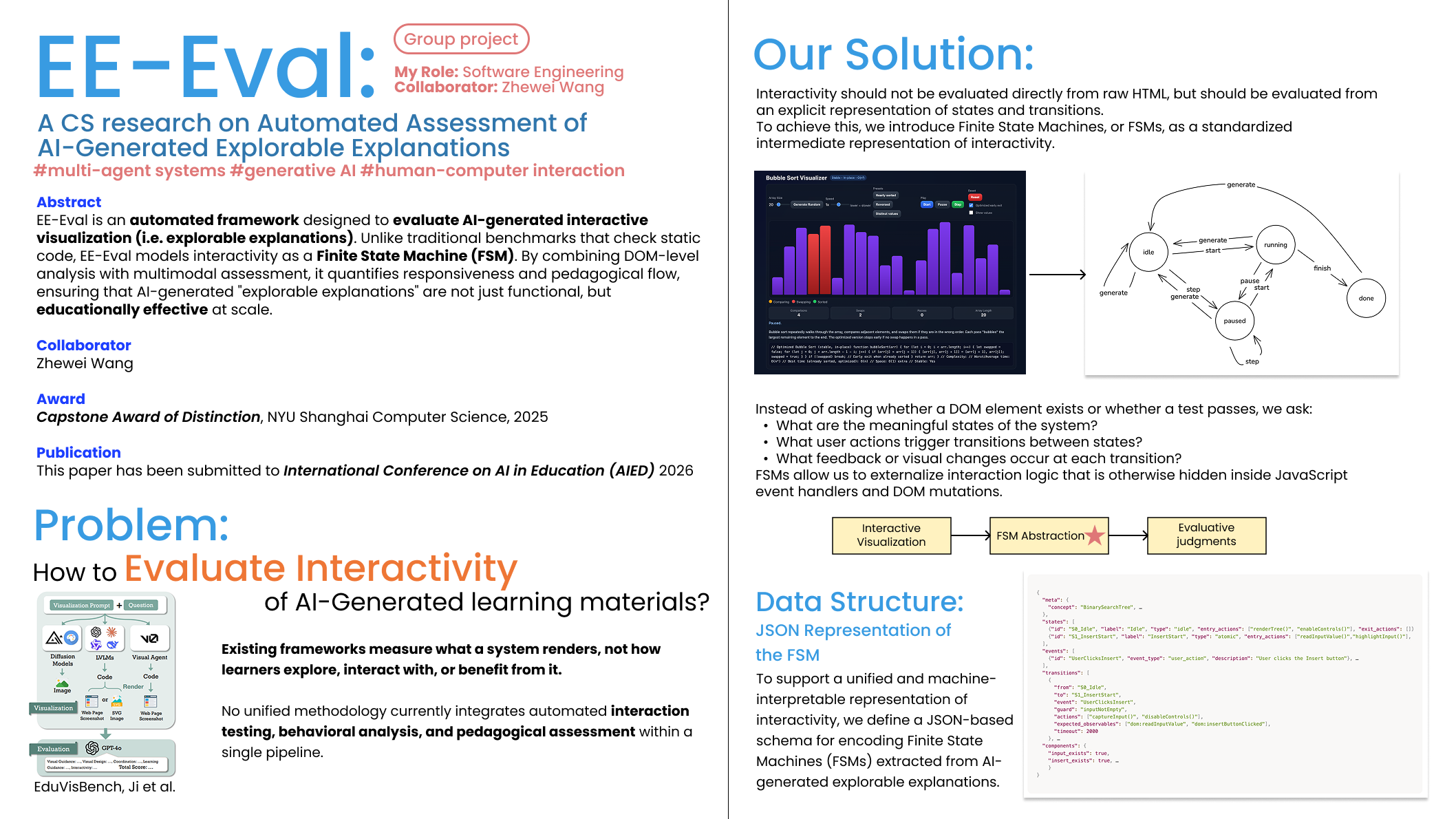

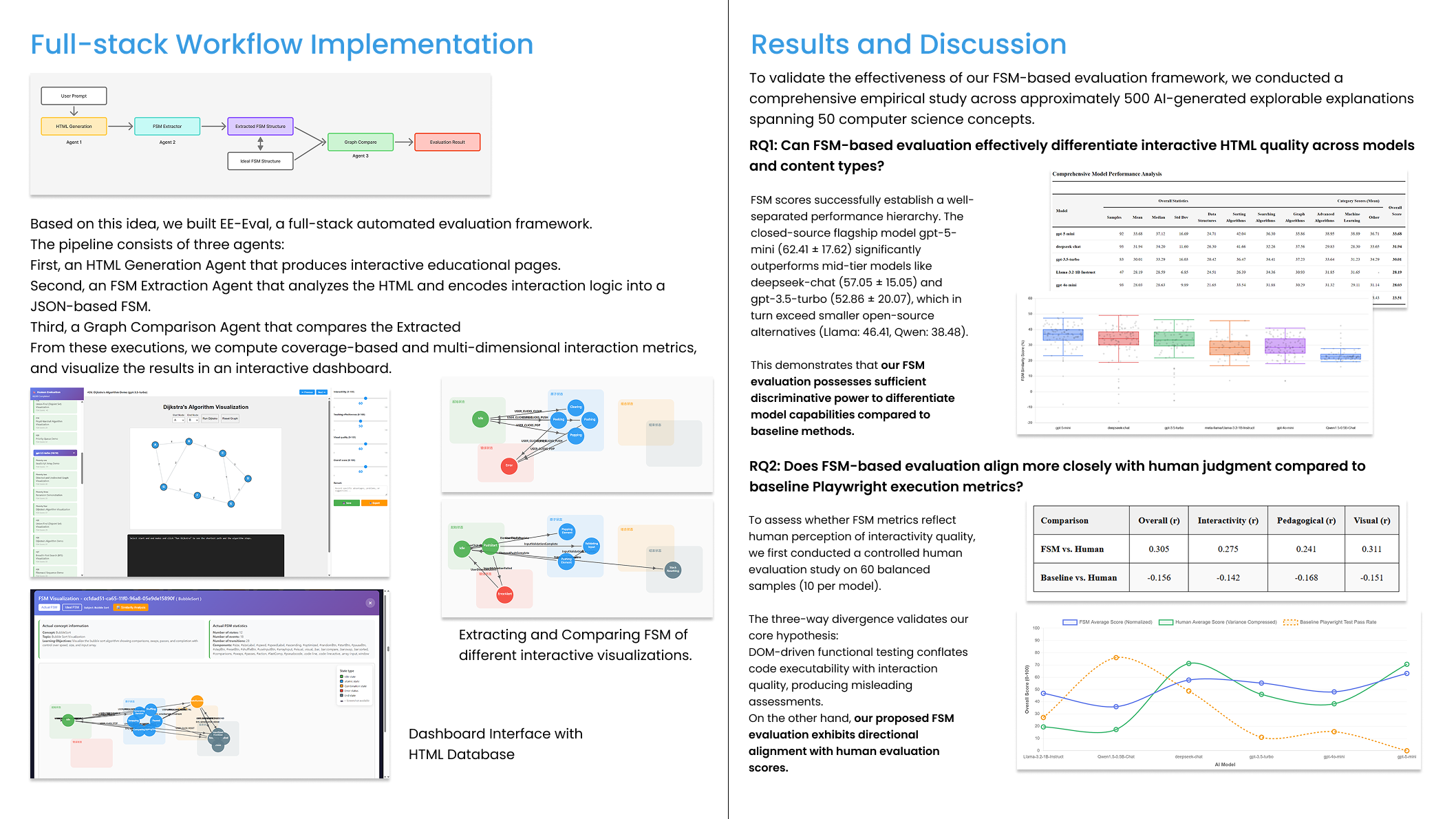

EE-Eval is an automated multi-agent pipeline for evaluating AI-generated interactive learning materials. Existing evaluation benchmarks focus on static properties such as code correctness or visual quality, but they do not assess interaction quality or whether an interactive system actually supports meaningful learning.

EE-Eval addresses this gap by modeling interactive learning materials as a new data structure based on finite state machines, in which states and the transitions between them represent the interaction flow. Through a full-stack pipeline, it enables systematic testing of the transitions between states to assess whether the interaction flow aligns with pedagogical goals.
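To make the finite-state-machine idea concrete, here is a minimal Python sketch. It is not EE-Eval's actual data structure or pipeline. The class name `InteractionFSM`, its methods, and the example states and actions (`intro`, `drag_slider`, and so on) are all hypothetical, chosen only to illustrate how an interaction flow could be represented and checked for whether a learning-relevant state is reachable.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class InteractionFSM:
    """Hypothetical model: states are UI states, edges are user actions."""
    start: str
    # transitions[state][action] -> next state
    transitions: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def add(self, src: str, action: str, dst: str) -> None:
        """Register a transition and make sure both states exist."""
        self.transitions.setdefault(src, {})[action] = dst
        self.transitions.setdefault(dst, {})

    def shortest_path(self, goal: str) -> Optional[List[str]]:
        """Breadth-first search for the shortest action sequence to `goal`.

        Returns None when the goal state cannot be reached from `start`.
        """
        queue = deque([(self.start, [])])
        seen = {self.start}
        while queue:
            state, path = queue.popleft()
            if state == goal:
                return path
            for action, nxt in self.transitions.get(state, {}).items():
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [action]))
        return None


# Illustrative (assumed) interaction flow for a slider-based explanation.
fsm = InteractionFSM(start="intro")
fsm.add("intro", "drag_slider", "exploring")
fsm.add("exploring", "submit_prediction", "feedback")
fsm.add("exploring", "reset", "intro")
fsm.add("feedback", "reset", "intro")

path_to_feedback = fsm.shortest_path("feedback")
path_to_missing = fsm.shortest_path("quiz")  # no such state in this flow
```

In a sketch like this, a goal state that returns `None` would flag an interaction flow in which the learner can never reach the intended feedback, which is one kind of check a transition-level test could perform.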

This project is a continuation of research from Interactive Neural Networks.

Toolkit

JavaScript, Python, Machine Learning, Full-stack Development

Award

Capstone Award of Distinction

NYU Shanghai Computer Science, 2025

Publication

This paper has been submitted to the International Conference on AI in Education (AIED) 2026.

My Role

Software Engineering, Research

Collaborator

Zhewei Wang

Links

Project Overview